We investigate how state-of-the-art computer vision, machine learning, and artificial intelligence can enable new capabilities in basic physics, strengthen nuclear nonproliferation and nuclear safety, and simplify operational tasks at nuclear and scientific facilities. Here are a few examples:

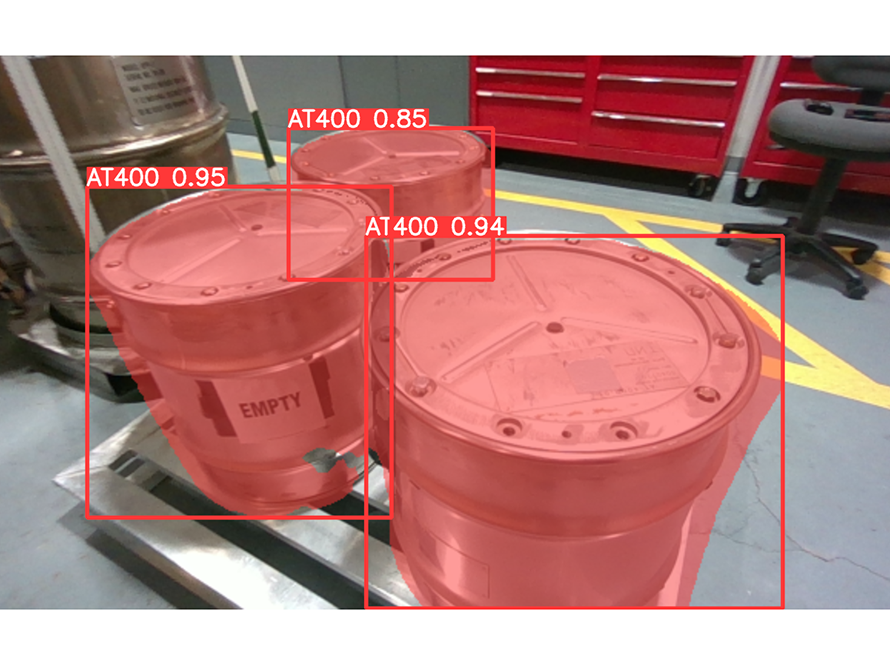

- Camera or lidar data are processed with object-detection and/or segmentation neural networks. The results can be used to localize and track objects of interest and to detect changes in a previously visited area.
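Change detection between two visits can be illustrated by matching detections of the same class across the two data sets. The following is a minimal sketch under stated assumptions, not a description of our actual pipeline. It assumes the detections have already been placed in a common map frame (for example, using SLAM poses) as axis-aligned boxes. The `Detection` type, the class labels, and the IoU threshold of 0.5 are hypothetical choices. Earlier detections with no match in the later visit are reported as removed, and unmatched later detections as added.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str  # hypothetical class label, e.g. a container type
    box: tuple  # (x_min, y_min, x_max, y_max) in map coordinates


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detect_changes(before, after, iou_threshold=0.5):
    """Greedily match same-class detections between two visits.

    Returns (unchanged pairs, removed detections, added detections).
    """
    unmatched = list(after)
    unchanged, removed = [], []
    for old in before:
        same_class = [d for d in unmatched if d.label == old.label]
        best = max(same_class, key=lambda d: iou(old.box, d.box), default=None)
        if best is not None and iou(old.box, best.box) >= iou_threshold:
            unchanged.append((old, best))
            unmatched.remove(best)  # each later detection matches at most once
        else:
            removed.append(old)
    # Whatever is left from the later visit had no counterpart before.
    return unchanged, removed, unmatched


visit_1 = [Detection("drum", (0.0, 0.0, 1.0, 1.0)), Detection("cask", (3.0, 0.0, 4.0, 1.0))]
visit_2 = [Detection("drum", (0.1, 0.0, 1.1, 1.0)), Detection("drum", (6.0, 0.0, 7.0, 1.0))]
unchanged, removed, added = detect_changes(visit_1, visit_2)
print(len(unchanged), [d.label for d in removed], [d.box for d in added])
# prints: 1 ['cask'] [(6.0, 0.0, 7.0, 1.0)]
```

Greedy matching keeps the sketch short. An optimal assignment (for example, the Hungarian algorithm) would be the natural replacement when many similar objects sit close together.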

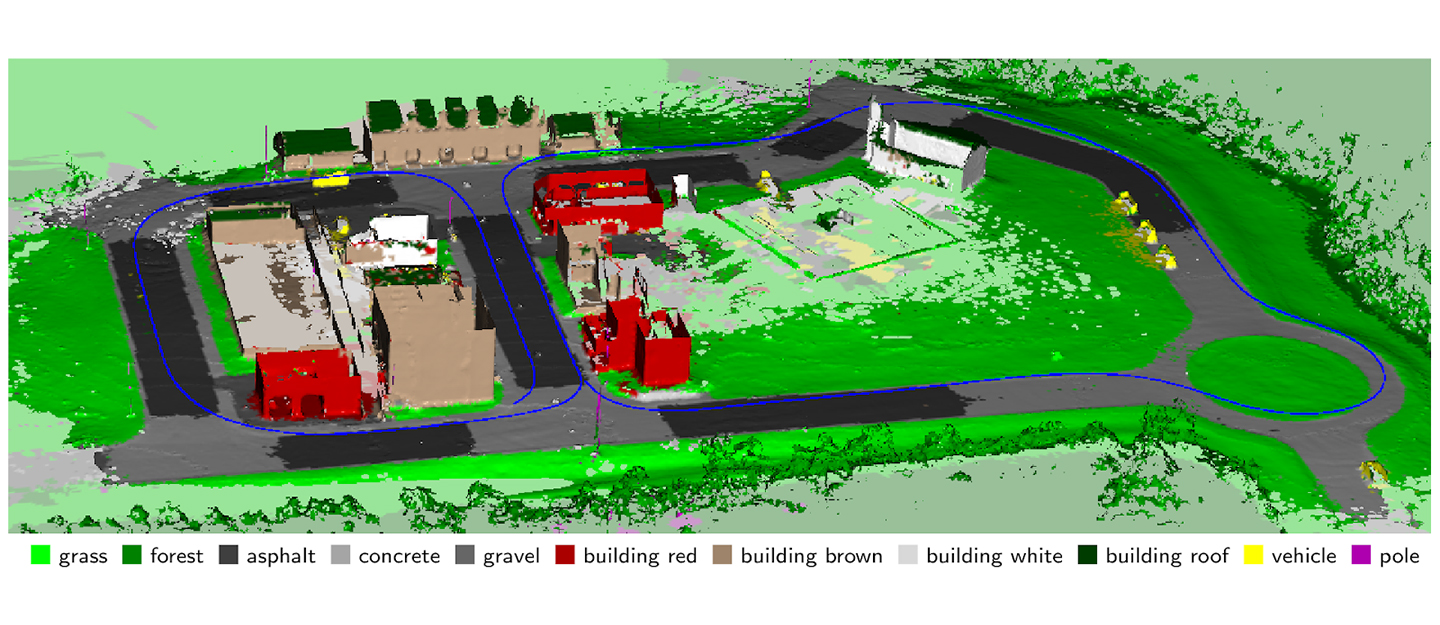

- Simultaneous Localization and Mapping (SLAM) techniques based on lidar and camera data help determine the trajectory of a sensor system through an area and create a three-dimensional representation of that area.
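The trajectory part of SLAM can be pictured as chaining relative motion estimates. Lidar or visual odometry estimates the rigid motion between consecutive scans, and composing these relative poses yields the sensor path. The minimal 2D sketch below assumes the relative motions (dx, dy, dtheta) are already estimated in the sensor frame. The function names are hypothetical. The sketch omits loop closure and graph optimization, which full SLAM systems add because pure chaining accumulates drift.

```python
import math


def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the frame of `pose`,
    to a global pose (x, y, theta). Angles are in radians."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the body-frame step into the global frame, then translate.
    new_x = x + c * dx - s * dy
    new_y = y + s * dx + c * dy
    # Wrap the heading back into [-pi, pi].
    new_theta = math.atan2(math.sin(theta + dtheta), math.cos(theta + dtheta))
    return (new_x, new_y, new_theta)


def integrate_trajectory(relative_motions, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a list of global poses (dead reckoning)."""
    trajectory = [start]
    for delta in relative_motions:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory


# Drive a 1 m square, turning 90 degrees left after each side.
square = [(1.0, 0.0, math.pi / 2)] * 4
for x, y, theta in integrate_trajectory(square):
    print(f"x={x:+.3f} y={y:+.3f} theta={math.degrees(theta):+.1f} deg")
```

With noise-free motions the final pose returns to the origin, up to floating-point rounding. With real odometry, small errors compound along the path. Revisiting a known place lets SLAM correct that drift.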

Nuclear material containers detected with a neural network (YOLOv8). The network produces both bounding-box and instance segmentation annotations, each with a confidence score and a class label. The model can distinguish between container types that look very similar.
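Detectors in the YOLO family emit many candidate boxes, each with a confidence score and a class. These candidates are commonly reduced by a confidence threshold followed by per-class non-maximum suppression (NMS). The following is a generic sketch of that post-processing step, not the Ultralytics implementation. The `Candidate` type and both threshold values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    box: tuple      # (x1, y1, x2, y2) in pixels
    score: float    # confidence score in [0, 1]
    class_id: int   # predicted class index


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(candidates, conf_threshold=0.25, iou_threshold=0.7):
    """Confidence filtering followed by greedy per-class NMS."""
    confident = [c for c in candidates if c.score >= conf_threshold]
    confident.sort(key=lambda c: c.score, reverse=True)  # best first
    kept = []
    for cand in confident:
        # Suppress only if an already kept box of the SAME class overlaps strongly.
        if all(k.class_id != cand.class_id or iou(k.box, cand.box) < iou_threshold
               for k in kept):
            kept.append(cand)
    return kept


raw = [
    Candidate((0, 0, 10, 10), 0.90, 0),
    Candidate((1, 0, 11, 10), 0.80, 0),    # near-duplicate of the first box
    Candidate((1, 0, 11, 10), 0.85, 1),    # same place, different class
    Candidate((50, 50, 60, 60), 0.10, 0),  # below the confidence threshold
]
for det in postprocess(raw):
    print(det)
```

Sorting by score first guarantees that, within each class, the highest-confidence box of an overlapping cluster is the one that survives. Keeping suppression per class lets two similar-looking container types at the same location both be reported.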

Adding radiation measurements or other sensor data provides a more holistic understanding of a scene or facility and of how nuclear materials are distributed within it.
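One simple way to combine radiation measurements with a map is to tag each count-rate reading with the sensor position at that moment (for example, from the SLAM trajectory) and average the readings per grid cell. The sketch below assumes time-synchronized 2D positions in metres and count rates in counts per second. The function name and the 1 m cell size are hypothetical. The sketch ignores detector response, source-to-detector distance, and shielding.

```python
import math
from collections import defaultdict


def radiation_grid(positions, count_rates, cell_size=1.0):
    """Average count-rate readings (counts/s) into square 2D grid cells.

    positions: (x, y) sensor positions in metres, one per reading.
    count_rates: readings taken at those positions.
    Returns {(i, j): mean count rate} for every visited cell.
    """
    if len(positions) != len(count_rates):
        raise ValueError("need exactly one position per reading")
    totals = defaultdict(float)
    samples = defaultdict(int)
    for (x, y), rate in zip(positions, count_rates):
        # Integer cell indices; floor keeps negative coordinates consistent.
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        totals[cell] += rate
        samples[cell] += 1
    return {cell: totals[cell] / samples[cell] for cell in totals}


path = [(0.2, 0.3), (0.8, 0.1), (1.5, 0.5), (2.4, 0.6)]
rates = [100.0, 120.0, 400.0, 150.0]
grid = radiation_grid(path, rates)
hottest = max(grid, key=grid.get)
print(grid, "hottest cell:", hottest)
# prints: {(0, 0): 110.0, (1, 0): 400.0, (2, 0): 150.0} hottest cell: (1, 0)
```

A grid average like this only shows where the sensor measured elevated rates, not where a source actually is. Localizing a source would additionally require a model of how the count rate falls off with distance.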

Example applications include:

- Creation of realistic models of emergency response scenes,

- Mapping of nuclear material containers in safeguards scenarios,

- Sensor networks deployed in urban environments to continuously monitor for illicit movement of radioactive materials,

- Environmental modeling to better understand and predict radiation backgrounds.

We also collaborate with the 88-Inch Cyclotron facility at Berkeley Lab to explore how machine learning and artificial intelligence can help automate the operation of scientific facilities.